GitLab is brilliant. The official GitLab runner is pretty robust and supports all the best new features of GitLab.

But one thing that poses a bit of an obstacle for those of us with fairly exotic build environments is that the official GitLab runner is written in Go, and Go (or gccgo) is not available on every platform.

So this project was born to fill the niche where you want to use GitLab on a system that simply can't run the official gitlab-runner and where the SSH executor won't work. You should be able to use a 'shell' executor on any system that can run git and supports a recent Python (2.7 or 3.6+).

The runner is in active use on other projects:

- CUnit - https://gitlab.com/cunity/cunit/pipelines

- Windows Docker

- Solaris 11 on AMD64

- Linux Ubuntu 19.04 on Power9

Systems that are intended as targets are:

- Windows + Docker (until the official Windows Docker runner is ready for use)

- Solaris 11 (patches to the official runner exist but are hard to apply)

- HP-UX on Itanium (IA64)

- AIX 6.1, 7.1 on POWER

- Linux on POWER

- x64/x86 Linux (but only for internal testing)

Supported Systems

| Platform | Shell | Docker | Artifact upload | Artifact download/dependencies |
|---|---|---|---|---|
| Linux (amd64) | yes | yes | yes | yes |
| Linux (POWER) | yes | maybe | yes | yes |
| Windows 10/2019 | yes | yes | yes | yes |
| Solaris 11 (amd64) | yes | n/a | yes | yes |
| Solaris 11 (SPARC) | yes | n/a | yes | yes |
| AIX 7.1 (python3) | yes | n/a | yes | yes |
| AIX 7.2 (python3) | yes | n/a | yes | yes |
| HP-UX 11.31 (python3) | yes | n/a | yes | yes |

There really is no sensible reason to use gitlab-python-runner on x64 Linux other than to test changes to the project.

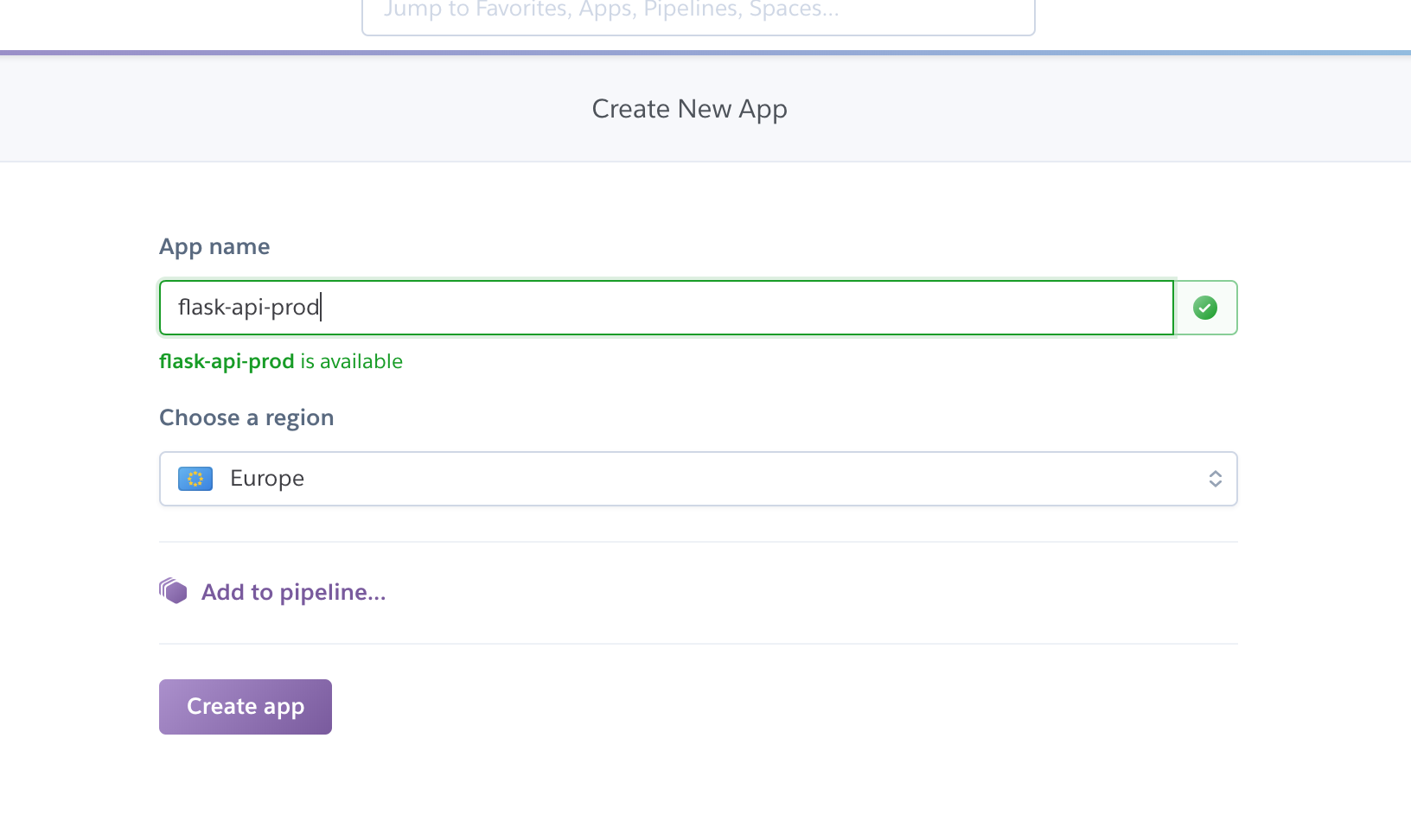

Register a Runner

At the very least you need to know the GitLab server address (e.g. https://gitlab.com) and the runner registration token (you get this from the runners page of your server, project or group).

This should exit without an error (TODO print more useful feedback) and have created a config file.

This will have created a new runner on your GitLab server with the tag given by `--tag ARG`. It should also have set a meaningful description for you.
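At its core, registration exchanges the registration token for a runner-specific token. The following is a hedged sketch that assumes GitLab's legacy registration endpoint, `POST /api/v4/runners`. That endpoint is part of GitLab's documented REST API, but the sketch does not show how gitlab-python-runner actually implements `--register`. `build_registration_request` is a hypothetical helper. It only builds the request and sends nothing over the network.

```python
import json
import urllib.request
from typing import List


def build_registration_request(
    server_url: str, registration_token: str, description: str, tags: List[str]
) -> urllib.request.Request:
    """Build (but do not send) a runner registration request.

    Assumes GitLab's legacy registration endpoint, POST /api/v4/runners.
    """
    payload = {
        "token": registration_token,
        "description": description,
        "tag_list": tags,
    }

    return urllib.request.Request(
        url=server_url.rstrip("/") + "/api/v4/runners",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_registration_request(
    "https://gitlab.com", "REGISTRATION_TOKEN", "solaris-11-builder", ["solaris"]
)
print(request.get_method(), request.full_url)  # POST https://gitlab.com/api/v4/runners
```

Passing the request to `urllib.request.urlopen` would send it. According to GitLab's API documentation, the JSON response includes a `token` field holding the new runner's own token, and a runner would store that value in its config. Recent GitLab releases deprecate registration tokens in favour of a newer runner-creation workflow, so treat this endpoint as legacy.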

Starting the Runner

You can start the runner from an init script, within a screen session, or as the entrypoint in a Docker container. You simply need to point it at the YAML file created by `--register`.

It should then start polling the server.
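Conceptually, polling is a simple loop. The sketch below is illustrative only. `request_job` and `run_job` are hypothetical callables: a real runner would ask the server for work, for example through GitLab's `POST /api/v4/jobs/request` endpoint, and would then execute the job. The fixed sleep interval is also an assumption, not the project's actual behaviour.

```python
import time
from typing import Callable, Optional


def poll(
    request_job: Callable[[], Optional[dict]],
    run_job: Callable[[dict], None],
    interval_seconds: float = 3.0,
    max_polls: Optional[int] = None,
) -> int:
    """Poll for jobs, run each one received, and return how many jobs ran."""
    jobs_run = 0
    polls = 0

    while max_polls is None or polls < max_polls:
        polls += 1
        job = request_job()

        if job is None:
            # No work available: wait before asking the server again.
            time.sleep(interval_seconds)
            continue

        run_job(job)
        jobs_run += 1

    return jobs_run
```

Injecting `request_job` and `run_job` keeps the loop testable without a GitLab server. In a long-running service, leave `max_polls` as `None` so that it polls forever.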

Python Guide

It is our collective responsibility to enforce this Style Guide since our chosen linter does not catch everything.

Values

Campsite rule: as these guidelines are themselves a work in progress, if you work with any code that does not currently adhere to the style guide, update it when you see it.

Linting

We use Black (strictly speaking, a code formatter) as our linter, with its default configuration.

There is a manual CI job in the review stage that lints the entire repo and returns a non-zero exit code if files need to be formatted. It is up to both the MR author and the reviewer to make sure that this job passes before the MR is merged. To format the entire repo, run `black .` from the top of the repo. This rewrites files in place, whereas `black --check .` only reports which files would change.

Spacing

Following PEP 8, we recommend putting blank lines around logical sections of code. When starting a for loop or an if/else block, add a blank line above it to give the code some breathing room. Newlines are cheap; brain time is expensive.
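Here is a small, made-up function laid out this way, with a blank line before the loop, before the if/else block and before the return:

```python
from typing import List


def summarise_scores(scores: List[int]) -> str:
    """Return a short, human-readable summary of a list of scores."""
    total = 0

    for score in scores:
        total += score

    if scores:
        summary = f"{len(scores)} scores, total {total}"
    else:
        summary = "no scores"

    return summary
```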

Type Hints

All function signatures should contain type hints, including for the return type, even if it is None. This is good documentation and can also be used with mypy for type checking and error checking.

Examples:
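The signatures below are illustrative and the function names are hypothetical. Every parameter and return type is annotated, including a return type of `None`:

```python
from typing import Dict, List, Optional


def get_row_count(rows: List[Dict[str, str]]) -> int:
    """Return the number of rows."""
    return len(rows)


def find_user(users: Dict[int, str], user_id: int) -> Optional[str]:
    """Return the user's name, or None if the id is unknown."""
    return users.get(user_id)


def log_message(message: str) -> None:
    """Print a message to standard output."""
    # The return type is annotated as None even though nothing is returned.
    print(message)
```

Running mypy over code that calls `get_row_count("abc")` would report an error, because a `str` is not a `List[Dict[str, str]]`.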

Import Order

Imports should follow the PEP 8 rules and, in addition, `import ...` statements should come before `from ... import ...` statements.

Example:
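The snippet below shows one reading of this rule. It uses only standard-library modules so that it runs anywhere. Within each PEP 8 group, plain `import` statements come first and `from ... import ...` statements follow:

```python
# Standard library: plain "import ..." statements first...
import json
import os

# ...then "from ... import ..." statements.
from datetime import datetime
from typing import Dict

# Third-party and local imports would follow as separate groups, in the same
# order, e.g. "import pandas as pd" before any "from ... import ..." lines.
```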

Docstrings

Every function should have a docstring. Because we use type hints in the function signature, there is no need to describe each parameter. Docstrings should use triple double quotes and be written in complete sentences with punctuation.

Examples:
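Here are two illustrative docstrings, one single-line and one multi-line. The functions themselves are made up:

```python
from typing import Tuple


def normalise_column_name(column_name: str) -> str:
    """Lower-case a column name and replace spaces with underscores."""
    return column_name.strip().lower().replace(" ", "_")


def split_full_name(full_name: str) -> Tuple[str, str]:
    """
    Split a full name into a first name and a last name.

    Everything after the first space is treated as the last name.
    """
    first_name, _, last_name = full_name.partition(" ")
    return first_name, last_name
```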

How to integrate Environment Variables

To make functions as reusable as possible, using environment variables directly inside functions is highly discouraged unless there is a very good reason. Instead, the best practice is to either pass in the specific variable you want to use or pass all of the environment variables in as a dictionary. This lets you pass in any dictionary and have it work, without requiring the variables to be defined at the environment level.

Examples:
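The following hypothetical pair of functions contrasts the two approaches. `DB_HOST`, `DB_PORT` and the function names are made up for illustration:

```python
import os
from typing import Mapping


# Discouraged: the function quietly depends on the process environment,
# so it raises KeyError wherever DB_HOST or DB_PORT is not set.
def get_database_url_from_env() -> str:
    """Build a database URL straight from environment variables."""
    return f"postgresql://{os.environ['DB_HOST']}:{os.environ['DB_PORT']}"


# Preferred: the caller passes the settings in as a dictionary.
def get_database_url(config: Mapping[str, str]) -> str:
    """Build a database URL from a dictionary of settings."""
    return f"postgresql://{config['DB_HOST']}:{config['DB_PORT']}"


# Works with any dictionary, e.g. hard-coded values in a test...
local_url = get_database_url({"DB_HOST": "localhost", "DB_PORT": "5432"})

# ...or, in production, with the real environment:
# production_url = get_database_url(dict(os.environ))
```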

Package Aliases

We use a few standard aliases for common third-party packages. They are as follows:

```python
import pandas as pd
import numpy as np
```

Variable Naming Conventions

Adding the type to the name makes code self-documenting. When possible, always use descriptive names for variables, especially with regard to data type. Here are some examples:

- `data_df` is a dataframe
- `params_dict` is a dictionary
- `retries_int` is an int
- `bash_command_str` is a string

If passing a constant through to a function, name each variable that is being passed so that it is clear what each thing is.

Lastly, try to avoid redundant variable names.

Examples:
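The examples below are illustrative and all names are made up. They cover type suffixes, naming the constants passed to a function, and avoiding redundant names:

```python
from typing import Dict


def build_request_settings(
    url_str: str, retries_int: int, timeout_seconds_int: int
) -> Dict[str, object]:
    """Collect request settings into a dictionary."""
    return {"url": url_str, "retries": retries_int, "timeout": timeout_seconds_int}


# Unclear: what do 3 and 30 mean at the call site?
settings_dict = build_request_settings("https://example.com", 3, 30)

# Clear: each constant is passed by name.
settings_dict = build_request_settings(
    url_str="https://example.com", retries_int=3, timeout_seconds_int=30
)

# Redundant: the "customer" prefix repeats what the variable name already says.
customer_dict = {"customer_name": "Ada", "customer_id": 1}

# Better:
customer_dict = {"name": "Ada", "id": 1}
```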

Making your script executable

If you can't run a script you've just created, it most likely needs to be made executable. Run `chmod 755 <path-to-script>`.

For an explanation of chmod 755 read this askubuntu page.
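On Unix-like systems, you can make the same permission change from Python with `os.chmod`, which may be handy in a setup script. To run the file directly (as `./script.py`), it also needs a shebang line such as `#!/usr/bin/env python3` so that the Python interpreter runs it. Here is a minimal sketch in which `make_executable` is a hypothetical helper:

```python
import os
import stat
import tempfile


def make_executable(path: str) -> None:
    """Give the file 755 permissions (rwxr-xr-x), like `chmod 755 path`."""
    os.chmod(path, 0o755)


# Demonstrate on a throwaway script file that starts with a shebang line.
with tempfile.NamedTemporaryFile(suffix=".py", delete=False) as script_file:
    script_file.write(b"#!/usr/bin/env python3\nprint('hello')\n")

make_executable(script_file.name)
mode = stat.S_IMODE(os.stat(script_file.name).st_mode)
print(oct(mode))  # 0o755
os.remove(script_file.name)
```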

Mutable default function arguments

Using mutable data structures as default arguments in functions can introduce bugs into your code. This is because the default value is created only once, when the function is defined, and the same object is reused in every subsequent call.

Example:
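This example is adapted from the guide referenced below. The list default is shared between calls, and using `None` as the default with a fresh list created inside the function avoids the problem:

```python
from typing import List, Optional


def append_to_buggy(element: int, to: List[int] = []) -> List[int]:
    """Append element to a list; buggy because the default list is shared."""
    to.append(element)
    return to


def append_to(element: int, to: Optional[List[int]] = None) -> List[int]:
    """Append element to a list, creating a new list when none is given."""
    if to is None:
        to = []

    to.append(element)
    return to


print(append_to_buggy(12))  # [12]
print(append_to_buggy(42))  # [12, 42]  (the same list was reused)
print(append_to(12))  # [12]
print(append_to(42))  # [42]
```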


Reference: https://docs.python-guide.org/writing/gotchas/

Folder structure for new extracts

- All client-specific logic should be stored in /extract; any API clients that may be reused should be stored in /orchestration.

- Pipeline specific operations should be stored in /extract.

- The folder structure in extract should include a file called `extract_{source}_{dataset_name}`, like `extract_qualtrics_mailingsends`, or `extract_qualtrics` if the script extracts multiple datasets. This script can be considered the main function of the extract, and is the file that gets run as the starting point of the extract DAG.

When not to use Python

Since this style guide is for the entire data team, it is important to remember that there is a time and place for using Python, and it is usually outside of the data modeling phase. Stick to SQL for data manipulation tasks where possible.

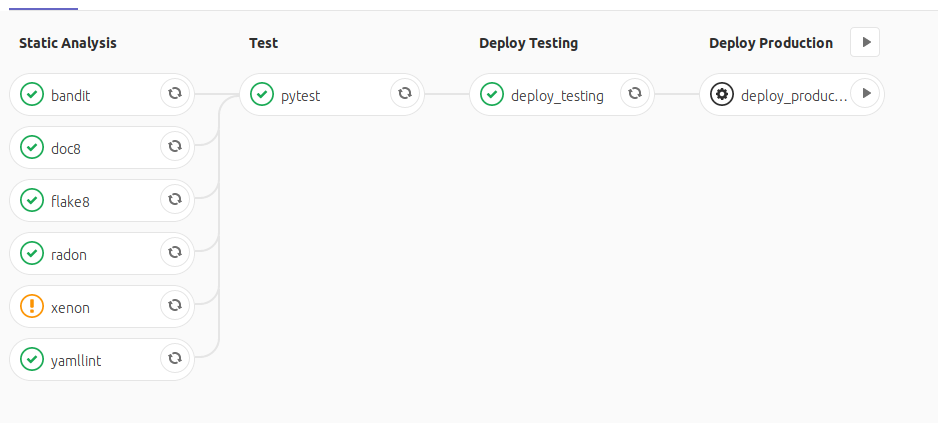

Unit Testing

Pytest is used to run unit tests in the Analytics project. The tests are executed from the root directory of the project with the python_pytest CI pipeline job. The job produces a JUnit report of test results which is then processed by GitLab and displayed on merge requests.

Writing New Tests

New testing file names should follow the pattern test_*.py so they are found by pytest and easily recognizable in the repository. New testing files should be placed in a directory named test. The test directory should share the same parent directory as the file that is being tested.

A testing file consists of one or more tests. An individual test is created by defining a function that has one or many plain Python assert statements. If the asserts are all true, the test passes. If any asserts are false, then the test will fail.

When writing imports, remember that tests are executed from the root directory. In the future, additional directories may be added to the PYTHONPATH for ease of testing, as needed.
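The following is a minimal sketch of such a file, for example a hypothetical `extract/test/test_utils.py`. In a real test file, the function under test would be imported using a path relative to the repository root (for example, a hypothetical `from extract.utils import clean_column_name`). Here it is defined inline so that the example runs on its own:

```python
def clean_column_name(column_name: str) -> str:
    """Lower-case a column name and replace spaces with underscores."""
    return column_name.strip().lower().replace(" ", "_")


def test_clean_column_name_replaces_spaces() -> None:
    """Spaces become underscores and the result is lower case."""
    assert clean_column_name("First Name") == "first_name"


def test_clean_column_name_strips_whitespace() -> None:
    """Leading and trailing whitespace is removed."""
    assert clean_column_name("  id ") == "id"
```

Running `pytest` from the repository root collects both `test_*` functions, because pytest discovers functions with that prefix in files named `test_*.py`.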

Exception handling

When writing a Python class to extract data from an API, it is the responsibility of that class to highlight any errors in the API process. Data modelling, source freshness and formatting issues should be highlighted using dbt tests.

Avoid using general try/except blocks, i.e.:
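The following illustration uses hypothetical function names. The first function uses a catch-all handler that hides the real problem. The second catches only the expected error and re-raises it with context:

```python
import json
from typing import Any, Dict


# Avoid: every error, including bugs unrelated to parsing, is silently swallowed.
def parse_response_broad(body: str) -> Dict[str, Any]:
    """Parse an API response body, hiding any failure."""
    try:
        return json.loads(body)
    except Exception:
        return {}


# Prefer: catch the specific error you expect and surface it clearly.
def parse_response(body: str) -> Dict[str, Any]:
    """Parse an API response body, raising a clear error if it is not valid JSON."""
    try:
        return json.loads(body)
    except json.JSONDecodeError as error:
        raise ValueError(f"API returned invalid JSON: {body[:50]!r}") from error
```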